Generative AI - Three Levels of Expertise

There are three levels of understanding of Generative AI:

Level 0 - Knows the term "Generative AI" but cannot tell whether it is yet another cosmetic buzzword or the real thing.

Level 1 - Knows the business impact and possible business use cases. GPT (Generative Pre-trained Transformer) is the biggest innovation the NLP domain has seen in decades: machines can perform some form of reasoning using large language models (LLMs), which are trained on massive amounts of data, an effort that takes expensive infrastructure (GPUs) and considerable time. This reasoning can also be combined with RAG (retrieval-augmented generation) and fine-tuning to bring in a custom knowledge base, tailoring the technology to your own knowledge domain.

Level 2 - Knows, at a research level, how the Transformer architecture inside GPT produces this reasoning, what hallucinations are, what the limitations and constraints of current models are, and which areas are being explored.
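To make the RAG idea concrete, here is a minimal sketch of the retrieval-augmented generation step, assuming a tiny in-memory knowledge base. It swaps real embedding-based vector search for a simple bag-of-words cosine similarity, and it stops at building the augmented prompt; the actual call to an existing LLM is left out because it depends on your provider's SDK. The names `DOCUMENTS`, `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical custom knowledge base; in practice these chunks would come
# from your own documents.
DOCUMENTS = [
    "Smart city sensors report traffic counts every minute.",
    "Hourly aggregates are computed by serverless functions.",
    "The integration layer runs Apache Camel Karavan on Kubernetes.",
]


def tokenize(text: str) -> Counter:
    """Lower-case bag-of-words term counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list, k: int = 1) -> list:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list, k: int = 1) -> str:
    """Augment the question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("Where does the integration layer run?", DOCUMENTS))
    # The resulting prompt would then be sent to an existing LLM.
```

The design point is that the model itself is untouched: custom knowledge enters only through the prompt, which is what distinguishes RAG from fine-tuning, where the model weights are updated.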

By all means, GenAI is a game changer: almost any task you do can be boosted with it.

- CODING: boost your productivity on routine reasoning tasks. Ask GenAI to generate a Python for loop nested 10 levels deep, where each level uses its iteration depth as the variable name and the value at the 7th depth is printed, and watch it create the code for you! It saves time and some of your thinking capacity as well.

- PRESENTATION / CONTENT WRITING: ask GenAI to rephrase a document (a better copy-paster) or to generate the entire document.

- GRAPHICAL: likewise, generate images using GenAI or modify existing ones (you can expect soon to alleviate common …)

Where do you stand in your GenAI Journey?

Video walkthrough of using an existing LLM with the RAG method: Link

Design Architecture Blueprint for IoT and AI-Based Smart Cities

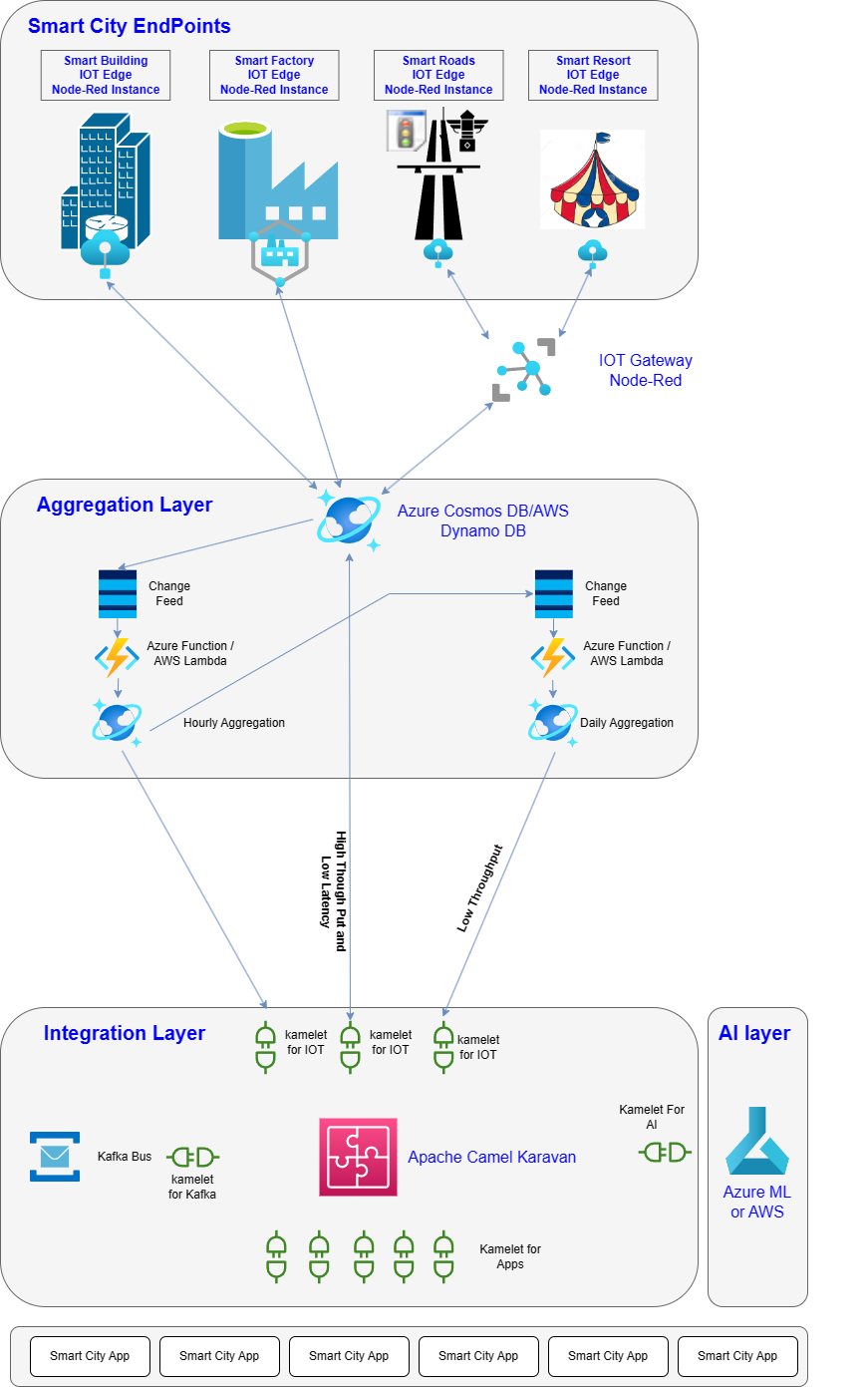

A smart city is an interactive, futuristic city that leverages IoT and connectivity to enable scenarios that were not possible in the past; both new and existing cities can benefit from emerging smart-city use cases. This blog post focuses on how a smart city can actually be implemented at the platform level: which technologies are needed and where exactly to place them to achieve the overall smart city.

Platform Architecture

The core of a smart city is IoT: small smart computing devices with sensors continuously send and receive data to and from a centralized hub. This data is usually high-throughput and requires low latency, so that events can be processed as close to real time as the technology allows. This is why a centralized Azure Cosmos DB or Amazon DynamoDB is used as the aggregation point; these are NoSQL databases optimized for insertion that run at cloud scale on these principal cloud providers. There is also the concept of an IoT gateway, a single point that tunnels data toward the aggregation layer, which is often necessary for certain types of endpoints. The platform solution used for IoT is Node-RED (originally developed by IBM), because it is a small-footprint JavaScript solution that can easily be hosted on the limited compute resources of IoT devices.
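The gateway's role can be sketched as a single point that buffers device readings and forwards them in batches to the aggregation store. This is a minimal illustration, assuming an in-memory stand-in (`InMemoryStore`) for Cosmos DB or DynamoDB; in a real deployment `insert` would call the cloud SDK (for example boto3's `Table.put_item` or azure-cosmos's `ContainerProxy.upsert_item`). Node-RED flows are JavaScript, so this Python code does not reflect Node-RED's API; `Reading`, `Gateway` and `batch_size` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor measurement sent by an IoT device."""
    device_id: str
    metric: str
    value: float
    timestamp: float  # Unix epoch seconds


class InMemoryStore:
    """Stand-in for the cloud NoSQL aggregation point (Cosmos DB / DynamoDB)."""

    def __init__(self) -> None:
        self.items = []

    def insert(self, item: dict) -> None:
        # A real deployment would call the cloud provider's SDK here instead.
        self.items.append(item)


class Gateway:
    """Hypothetical IoT gateway: buffers readings and forwards them in batches."""

    def __init__(self, store: InMemoryStore, batch_size: int = 3) -> None:
        self.store = store
        self.batch_size = batch_size
        self.buffer = []

    def receive(self, reading: Reading) -> None:
        self.buffer.append(reading)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        for r in self.buffer:
            self.store.insert({
                # Unique item id built from device and timestamp.
                "id": f"{r.device_id}#{r.timestamp}",
                "device_id": r.device_id,
                "metric": r.metric,
                "value": r.value,
                "timestamp": r.timestamp,
            })
        self.buffer.clear()


if __name__ == "__main__":
    store = InMemoryStore()
    gateway = Gateway(store, batch_size=2)
    gateway.receive(Reading("sensor-1", "vehicles", 12, 1_700_000_000))
    gateway.receive(Reading("sensor-2", "vehicles", 7, 1_700_000_060))
    print(len(store.items))  # 2: the batch was flushed once it reached batch_size
```

Batching at the gateway trades a little latency for fewer write calls to the store; a production gateway would also flush on a timer so that a partially filled batch is not held indefinitely.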

At the aggregation layer, before data goes into the integration layer, we often need to work on aggregates of the data, such as hourly sums or the total of values for a whole day. Either AWS Lambda or Azure Functions can be leveraged to perform this aggregation.
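An hourly aggregation function might look like the following sketch, written in the AWS Lambda Python handler style (`lambda_handler(event, context)`); an Azure Function would wrap the same logic in its own entry point. The event shape, with a `readings` list of `device_id`, ISO-8601 `timestamp` and `value` fields, is an assumption for illustration, not a format defined by either cloud provider.

```python
from collections import defaultdict
from datetime import datetime


def lambda_handler(event, context):
    """Sum readings per device and per clock hour.

    Assumed (hypothetical) event shape:
    {"readings": [{"device_id": str, "timestamp": ISO-8601 str, "value": number}]}
    """
    totals = defaultdict(float)
    for r in event["readings"]:
        ts = datetime.fromisoformat(r["timestamp"])
        # Truncate to the start of the hour to form the bucket key.
        bucket = ts.replace(minute=0, second=0, microsecond=0).isoformat()
        totals[(r["device_id"], bucket)] += r["value"]
    return {
        "hourly": [
            {"device_id": device, "hour": bucket, "sum": total}
            for (device, bucket), total in sorted(totals.items())
        ]
    }


if __name__ == "__main__":
    event = {"readings": [
        {"device_id": "sensor-1", "timestamp": "2024-05-01T08:05:00", "value": 3},
        {"device_id": "sensor-1", "timestamp": "2024-05-01T08:40:00", "value": 4},
        {"device_id": "sensor-1", "timestamp": "2024-05-01T09:10:00", "value": 5},
    ]}
    print(lambda_handler(event, None))
```

For a whole-day total, the bucket key would be `ts.date().isoformat()` instead of the truncated hour.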

The basic goal of the integration layer is to connect multiple systems. We cannot use Node-RED here; instead we need a performant system that can scale both horizontally and vertically. So here we use Apache Camel Karavan (an open-source, GUI-based integration platform; see the link at the end of this post for more on it). Kubernetes is the base platform that hosts the Karavan solution for cloud-scale elasticity. Connectors exist for each technology, allowing development of all types of flows between applications.
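A typical flow in the integration layer is a content-based router: each incoming message is inspected and sent to the system that should handle it. Apache Camel expresses this enterprise integration pattern with `choice()`, `when()` and `otherwise()`, and Karavan lets you build such routes visually. The Python below is only a minimal sketch of the pattern itself, assuming messages are plain dictionaries with a `type` field; the class and the downstream targets are hypothetical and are not Camel's or Karavan's API.

```python
from typing import Callable, Optional

Message = dict
Predicate = Callable[[Message], bool]
Handler = Callable[[Message], None]


class ContentBasedRouter:
    """Send each message to the first handler whose predicate matches,
    falling back to a default handler otherwise."""

    def __init__(self) -> None:
        self.routes = []  # list of (predicate, handler) pairs, checked in order
        self.fallback: Optional[Handler] = None

    def when(self, predicate: Predicate, handler: Handler) -> "ContentBasedRouter":
        self.routes.append((predicate, handler))
        return self

    def otherwise(self, handler: Handler) -> "ContentBasedRouter":
        self.fallback = handler
        return self

    def route(self, message: Message) -> None:
        for predicate, handler in self.routes:
            if predicate(message):
                handler(message)
                return
        if self.fallback is not None:
            self.fallback(message)


if __name__ == "__main__":
    # Hypothetical downstream systems, modeled as lists that collect messages.
    ai_layer, city_apps, dead_letter = [], [], []
    router = (
        ContentBasedRouter()
        .when(lambda m: m.get("type") == "traffic_hourly", ai_layer.append)
        .when(lambda m: m.get("type") == "alert", city_apps.append)
        .otherwise(dead_letter.append)
    )
    for msg in [{"type": "traffic_hourly", "sum": 7.0}, {"type": "alert"}, {"type": "unknown"}]:
        router.route(msg)
    print(len(ai_layer), len(city_apps), len(dead_letter))  # 1 1 1
```

Keeping unmatched messages in a dead-letter target, rather than dropping them, makes misrouted data visible when new message types are added.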

Alongside the integration layer is the AI layer, which takes its data from the integration layer. For example, to predict traffic per hour, data flows from the aggregation layer through the integration layer to the ML component of the AI layer, which generates and tunes the hourly traffic model.
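As the simplest possible illustration of an hourly traffic model, the sketch below fits a naive baseline: the mean vehicle count observed for each hour of the day. This is an assumption chosen for clarity, not the model the AI layer would actually use; a real ML component would typically train a proper regression or time-series model. The function names and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def fit_hourly_baseline(history):
    """Fit a naive per-hour model: the mean vehicle count for each hour of day.

    history: iterable of (hour_of_day, vehicle_count) pairs, hour in 0..23.
    """
    buckets = defaultdict(list)
    for hour, count in history:
        if not 0 <= hour <= 23:
            raise ValueError(f"hour out of range: {hour}")
        buckets[hour].append(count)
    return {hour: mean(counts) for hour, counts in buckets.items()}


def predict(model, hour, default=0.0):
    """Predicted count for an hour; `default` when that hour was never observed."""
    return model.get(hour, default)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    history = [(8, 100), (8, 120), (9, 80), (17, 150)]
    model = fit_hourly_baseline(history)
    print(predict(model, 8), predict(model, 3))
```

"Tuning" in this toy setting simply means refitting as new hourly aggregates arrive from the integration layer; such a baseline is also useful as a yardstick that any real model should beat.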

Lastly, the smart-city apps that implement the use cases leverage information from both the integration layer and the AI layer to perform their tasks.

Further Reading:

Apache Camel Karavan Technical Review: SRK-TechBlog | Review of Apache Camel Karavan as GUI Based Integration Platform (emetalsoft.com)